What if children aren’t just AI users to be protected, but experts we should be learning from? At MyData 2025, the MyData4Children workshop turned the usual conversation on its head. Instead of adults designing AI for children, seven children aged 7-10 took the lead, showing us how they understand AI, what they want from it, and—perhaps most importantly—what they absolutely don’t want it to do. The results surprised even the parents in the room.

Beyond Protection: Children as Co-Creators

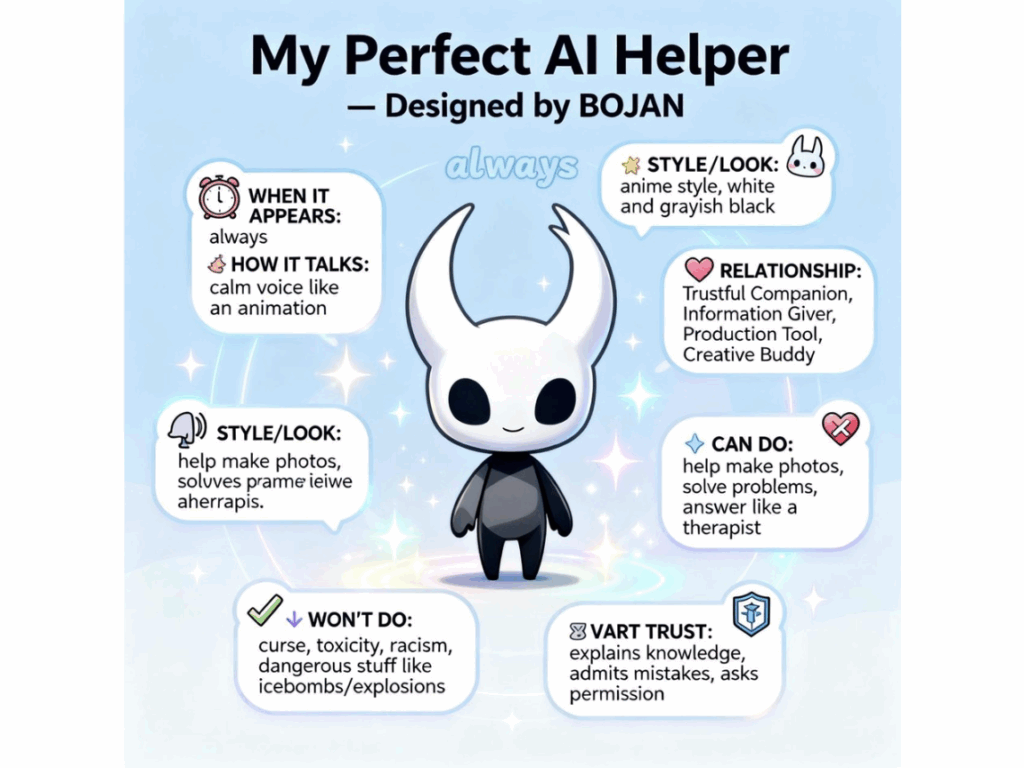

These children didn’t see AI as magic or something to fear. They understood it as a tool—one that makes mistakes, needs boundaries, and should only work when called upon. They designed their ideal AI assistants with remarkable clarity about agency and control: AI that appears only when needed, that challenges them to think rather than handing over easy answers, and that respects their autonomy while still allowing parents to stay involved.

One 10-year-old went beyond designing a poster. He coded his own AI assistant prototype and offered to monetize it. These aren’t passive consumers waiting to be protected—they’re digital natives ready to shape their own future.

The Invisible Power Struggle

But the workshop also revealed something more complex. Parents discovered that their children understood AI far better than expected, and at the same time found themselves grappling with a troubling question: Are we witnessing children’s organic relationship with AI, or have they already been conditioned to accept its inevitability? When AI becomes “just another toy,” where do the real boundaries of agency lie?

This tension matters because much of current AI development treats children as an afterthought, with business models that prioritize engagement and profit over genuine human-centric values. The MyData principles of individual empowerment and fair data use become even more critical when the individuals in question are still developing their understanding of privacy, trust, and digital rights.

What MyData Can Learn

The children in this workshop consistently returned to themes central to MyData: transparency about how their data is used, control over when and how technology operates in their lives, and the right to understand and question the systems around them. They articulated something adults often miss—that trust in AI isn’t about the technology alone, but about the relationships surrounding it. Children, parents, teachers, and designers must all have agency in shaping how AI enters children’s lives.

Perhaps most tellingly, children insisted that AI should support their growth, not replace their thinking. They want to learn, to struggle, to develop critical thinking skills. They understand intuitively what MyData advocates: that truly human-centric technology empowers people rather than diminishing them.

An Invitation to Read Further

The full report offers rich detail: children’s hand-drawn illustrations of how they conceptualize AI, the AI companion posters they created, candid reflections from surprised parents, and comprehensive analysis across three themes—trust, agency, and aspiration. It includes concrete recommendations for developers, policymakers, and educators committed to building AI that genuinely serves children’s development and rights.

Because if we’re serious about human-centric AI and data governance, shouldn’t we start by listening to the humans whose entire lives will be shaped by the decisions we make today?

Read the full report to hear what children really think about AI—and what that means for building better digital futures for everyone.

A MyData4Children initiative by Paula Bello, Jana Pejoska, and Gülşen Güler