Healthcare AI is scaling faster than our ability to trust it. To continue the journey of evidence-based medicine (EBM), we need to keep low-quality, unverifiable output ("slop") out of it.

Across medicine and life sciences, we are still living with a replication crisis: studies that cannot be reproduced, opaque data pipelines, and statistical shortcuts that quietly turn into clinical “truths.” When these weaknesses are embedded in AI systems, they do not disappear — they scale.

The Nordics are in a unique position to do better.

A lesson from medicine: reproducibility matters

The well-known fMRI cluster failure showed how widely used analytical methods produced false positives for years before being detected. The technology was not the problem — governance, transparency, and provenance were.

AI in healthcare faces a similar risk. If we train decision support, diagnostics, or triage systems on data we cannot trace, consent we cannot explain, or evidence we cannot reproduce, we undermine evidence-based medicine itself.

Bringing AI governance into clinical reality

In the recent work of a MyData Nordic Hub pilot project, we propose a Nordic, human-centric AI governance model that is designed explicitly for EHDS use, not just compliance. It builds on:

- MyData principles and the Data Transfer Initiative principles on AI: patients control access to, portability of, and reuse of their data

- EHDS, GDPR, AI Act, eIDAS 2.0: aligned with where European healthcare regulation is actually going

- Existing Nordic infrastructures: work by Kanta, 1177/NPÖ, Findata, SciLifeLab, and Norwegian Health Net, not greenfield fantasies

At the core is a simple idea many clinicians and citizens already understand:

Accountability and reliability of patient data.

Both are needed to make data interoperability valuable for clinicians and citizens alike. To leverage the power of AI, the accountability and reliability of the data being shared and used are critical.

Six layers of trust — applied to care delivery

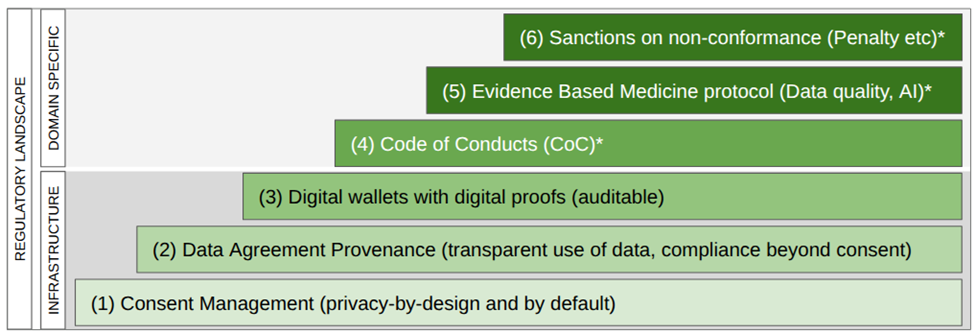

To make this operational, governance must be embedded into everyday workflows, so that it facilitates ethical decisions and legal compliance while keeping data sharing in the ecosystem efficient. We propose to build on and improve a model proposed in CRANE, a PCP project for self-monitoring. It defines three infrastructure safeguards and three domain-specific safeguards. To further build trust in a health data ecosystem, we try to define levels 4 to 6 in more detail, now that AI is emerging globally.

Figure 1: Six levels of trust in a health dataspace, with robust governance in all layers of the stack

- Trust that works

A dynamic, machine-readable legal basis, e.g. consent based on ISO 27560, replaces unreadable T&Cs. Patients can meaningfully allow or refuse sharing and secondary use without blocking care. The consent is connected to the patient data it covers.

- Data agreements and provenance

Every dataset and AI model must have a transparent and traceable origin: who accessed what, under which legal basis, and for which purpose. This is based on MyTerms (IEEE P7012) and an updated ISO 27560 TS.

- Digital wallets and apps for patients and organisations

EUDI wallets enable cross-border use of IPS and ePrescription applications while preserving privacy and professional accountability.

- Codes of conduct and oversight

Healthcare AI needs domain-specific codes of conduct (EUCROF) and independent watchdog functions, not generic tech ethics.

- Evidence-based AI

Standards like FHIR IPS, openEHR, and OMOP are not “IT choices”; they are prerequisites for clinical reproducibility and explainability.

- Predictable enforcement and incentives

Trustworthy AI must be rewarded in procurement and certification, not treated as optional “extra compliance.”
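To make the first two layers more concrete, a machine-readable legal basis and a provenance trail could look roughly like the Python sketch below. It is an illustration only, not the pilot project's implementation: the class names, field names, and purpose labels are hypothetical and do not reproduce the ISO 27560 or IEEE P7012 schemas. It also assumes that direct care rests on a legal basis other than consent, which is one way to let patients refuse secondary use without blocking care.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical purpose labels; a real system would use a controlled vocabulary.
CARE = "care_delivery"
RESEARCH = "secondary_use_research"
AI_TRAINING = "secondary_use_ai_training"


@dataclass(frozen=True)
class ConsentRecord:
    """Illustrative consent record tied to one dataset (assumed fields)."""
    patient_id: str
    dataset_id: str
    permitted_purposes: frozenset
    valid_until: datetime
    withdrawn: bool = False


@dataclass(frozen=True)
class AccessEvent:
    """Provenance entry: who accessed what, on which legal basis, for which purpose."""
    actor: str
    dataset_id: str
    purpose: str
    legal_basis: str
    allowed: bool
    timestamp: datetime


def decide(consent, actor, purpose, now):
    """Evaluate one access request and return the event to append to the audit log."""
    if purpose == CARE:
        # Assumption: care delivery does not depend on consent,
        # so refusing secondary use never blocks treatment.
        allowed, basis = True, "provision_of_care"
    else:
        active = not consent.withdrawn and now <= consent.valid_until
        allowed = active and purpose in consent.permitted_purposes
        basis = "consent" if allowed else "none"
    return AccessEvent(actor, consent.dataset_id, purpose, basis, allowed, now)


now = datetime(2025, 1, 1, tzinfo=timezone.utc)
consent = ConsentRecord(
    patient_id="patient-1",
    dataset_id="glucose-2024",
    permitted_purposes=frozenset({RESEARCH}),
    valid_until=datetime(2026, 1, 1, tzinfo=timezone.utc),
)

# Denied requests are logged too, so the trail shows refusals as well as accesses.
audit_log = [
    decide(consent, "dr-a", CARE, now),
    decide(consent, "lab-b", RESEARCH, now),
    decide(consent, "vendor-c", AI_TRAINING, now),
]
for event in audit_log:
    print(event.actor, event.purpose, event.legal_basis, event.allowed)
```

In this sketch the patient has agreed to research use but not to AI training, so the third request is denied and logged while the clinician's access for care is unaffected. Keeping the decision and the log entry in one function is a deliberate choice: every answer to "may this data be used?" leaves a trace that an auditor can later inspect.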

The result is an extended model of trust for health data interoperability and the use of AI. It can cover many architectural choices, from zero trust to decentralized, while also bridging to more federated solutions.

What this changes in medical practice

If implemented correctly, this model enables:

- Safer clinical decision support built on reproducible evidence and data quality assurance

- Patient-generated data (e.g. diabetes, wearables) used responsibly at scale and with transparency

- Cross-border continuity of care via IPS documents, ePrescriptions and secure patient data sharing

- AI that clinicians can question, audit, and improve, instead of having to trust it blindly or repeat tests unnecessarily, which is costly for the system and annoying for the patient

Nordic pilots like CRANE and the Swedish national system demonstrators already show this is feasible, not theoretical.

Why this matters now

- EHDS will soon give patients enforceable rights to access, copy, and port their health data. Healthcare systems and AI solutions that ignore this reality should not survive.

- The real opportunity is to turn regulation into better medicine: more trustworthy data, clearer clinical accountability, and AI that strengthens, rather than weakens, evidence-based practice.

The Nordics can help set that standard globally, if we insist on governance that works where it matters most: from the point of care to the boardroom.

Fredrik Lindén, founder of Hamling, wrote this article and incorporated feedback from Louise Helliksen (CEO of EYD).

Featured photo by National Cancer Institute on Unsplash.

This project has received funding from the Nordic Culture Point.
